Gravity waves finally detected, Einstein proven right

- Thread starter TURBODERP

- Tags

- astronomy physics relativity

NonSequtur

a body

To be fair, magic9mushroom explicitly said so: "given the ability to utilise tachyonic signals." It's a pretty hefty given, but it's a typical turn of phrase to introduce an assumption. Perhaps more common in mathematical contexts than others.

I tend not to read casual posts as I do proofs, so I read it as 'since we have tachyons, we know time travel is possible', reinforced by his use of 'demonstratably possible'. If that is a misunderstanding of @magic9mushroom, then I apologise.

TheLastOne

Person of impeccable tastes (for destruction)

This whole thing is an experimental triumph. I'm making a note here: huge success.

You just had to go there, didn't you. You went there. I'm not even angry, I'm being so sincere right now.

the atom

Anarcho-Kemalist Thought Leader

- Location

- Comfortably numb

Wait, so we just witnessed a second Big Bang?

Am I the only one squeeing over this?

Favorite part:

The invisible, silent scream of space-time, the echoes of which are just barely detectable from over a billion light years away.

Black Noise

Curious

- Location

- About 1AU from Sol

Do you have gifs for frame-dragging too?

While it's true that gravitational radiation requires a changing quadrupole moment, rather than the changing dipole moment that suffices for electromagnetic radiation, and this definitely affects how hard it is to produce strong waves, the gravitational wave energy flux still falls off as 1/r² and the amplitude as 1/r, as usual. The latter is what's important here, as it's directly related to the strain observed by LIGO.

Thanks, fixed in the original post. This is what I get for forgetting conservation of energy and only relying on vague memories of EM field multipole expansion.
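A quick numeric sketch of the scaling point above, assuming an idealised far-field source with all source-dependent constants set to 1 (the helper names are made up for illustration): moving the same source twice as far away halves the strain amplitude, which is what an interferometer like LIGO responds to, but cuts the energy flux to a quarter.

```python
# Illustrative sketch only: far-field fall-off of a wave from a fixed source.
# Source-dependent constants are set to 1, so only distance ratios matter.


def strain_ratio(r_near: float, r_far: float) -> float:
    """h(r_far) / h(r_near) for an amplitude (strain) falling off as 1/r."""
    return r_near / r_far


def flux_ratio(r_near: float, r_far: float) -> float:
    """F(r_far) / F(r_near) for an energy flux falling off as 1/r^2."""
    return (r_near / r_far) ** 2


if __name__ == "__main__":
    # Same source, twice as far away.
    print("strain ratio:", strain_ratio(1.0, 2.0))  # 0.5
    print("flux ratio:  ", flux_ratio(1.0, 2.0))    # 0.25
```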

Nah, the tiny part of the Big Bang still accessible (aka the observable universe) was to this event what this event was to our sun's regular per-second output.

TheBleachDoctor

Secretly a Cat

- Location

- The Plane of Infinite Kittens

- Pronouns

- She/Her

....

Physics is confusing.

- Pronouns

- He/Him

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

It's because of the principle of relativity. If instantaneous signaling is possible in one inertial frame, then instantaneous signaling must be possible in any other inertial frame. Thus (green signal is possible)⇒(yellow signals are possible). Of course, if you throw out the principle of relativity, you could keep causality by e.g. insisting that there is a global reference frame in which the signaling is instantaneous and no other FTL speeds are allowed.

In general, a Lorentz boost on a Minkowski plane acts like a rotation along hyperbolas with lightlike paths as their asymptotes, so you can boost any superluminal speed (including faster-than-infinite, i.e. directed below the spatial axis) into any other superluminal speed. So the principle of relativity would imply that any superluminal speed being possible implies that all of them are.
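The claim that a boost can turn one superluminal speed into an "infinite" or "faster-than-infinite" one can be checked numerically. This is a minimal sketch assuming 1+1 dimensions, units with c = 1, and a hypothetical signal that travels at 2c in the original frame (emitted at the origin, received at t = 1, x = 2); `boost_event` is an illustrative helper, not a library function. At v = 0.5 the reception time t′ comes out as zero (the signal is instantaneous in that frame), and at v = 0.6 it is negative (the signal arrives before it was sent), while t² − x² stays fixed, as expected of a hyperbolic rotation.

```python
import math


def boost_event(t: float, x: float, v: float) -> tuple[float, float]:
    """Coordinates (t', x') of the event (t, x) in a frame moving at velocity v.

    Standard 1+1D Lorentz boost in units where c = 1; requires |v| < 1.
    """
    if abs(v) >= 1.0:
        raise ValueError("boost velocity must satisfy |v| < 1")
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x), gamma * (x - v * t)


# Hypothetical FTL signal: emitted at (t, x) = (0, 0), received at (1, 2),
# i.e. travelling at 2c in the original frame. The emission event stays at
# the origin under every boost, so t' < 0 means "received before sent".
T_RX, X_RX = 1.0, 2.0

for v in (0.0, 0.5, 0.6):
    t_new, x_new = boost_event(T_RX, X_RX, v)
    # A boost is a hyperbolic rotation: the interval t^2 - x^2 is unchanged.
    assert math.isclose(t_new**2 - x_new**2, T_RX**2 - X_RX**2)
    print(f"v = {v}: reception at t' = {t_new:+.3f}, x' = {x_new:.3f}")
```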

NonSequtur

a body

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

It doesn't look like it's moving at 1.8c - this ain't a Galilean transformation. It looks like it's moving a bit faster than 0.99c.

- Pronouns

- He/Him

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

Nope. They will appear to be receding from each other at 0.9something c. Need to find the right equations for that but all you can ever do is add more 9s onto the end. That's what relativity is, you can't ever go faster than c from any perspective.
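The "add more 9s" behaviour shows up if you keep composing boosts. The sketch below assumes a hypothetical chain of ships, each moving at 0.9c relative to the one before, and uses the standard relativistic velocity-addition formula (u + v)/(1 + uv) in units of c with exact fractions, so the result is genuinely below 1 rather than just rounded: 0.9, then 180/181 ≈ 0.9945, then 3429/3430 ≈ 0.99971, and so on.

```python
from fractions import Fraction


def add_velocities(u: Fraction, v: Fraction) -> Fraction:
    """Relativistic velocity addition in units of c: (u + v) / (1 + u*v)."""
    return (u + v) / (1 + u * v)


STEP = Fraction(9, 10)  # each ship moves at 0.9c relative to the previous one

speed = STEP
for n_boosts in range(2, 6):
    speed = add_velocities(speed, STEP)
    # Exact rational arithmetic, so this is a genuine check, not rounding luck.
    assert speed < 1
    print(f"{n_boosts} boosts: {speed} ~ {float(speed):.7f}c")
```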

Nope. They will appear to be receding from each other at 0.9something c. Need to find the right equations for that but all you can ever do is add more 9s onto the end. That's what relativity is, you can't ever go faster than c from any perspective.

They don't have to be 9s.

- Location

- Challenge God? y/n

Nope. They will appear to be receding from each other at 0.9something c. Need to find the right equations for that but all you can ever do is add more 9s onto the end. That's what relativity is, you can't ever go faster than c from any perspective.

Unfair.

- Location

- Wörms, the half continent

Nope. They will appear to be receding from each other at 0.9something c. Need to find the right equations for that but all you can ever do is add more 9s onto the end. That's what relativity is, you can't ever go faster than c from any perspective.

This reminds me of a more experimental proof of why 0.9 recurring is equal to one. No matter how many nines you add, it will be less than one, because one is equal to an infinite series of nines.
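The "0.9 recurring" argument can be made concrete with exact fractions. A minimal sketch (the function name is illustrative): a string of n nines falls short of 1 by exactly 10⁻ⁿ, and since that gap can be made smaller than any positive number, the infinite series converges to exactly 1.

```python
from fractions import Fraction


def nines(n: int) -> Fraction:
    """0.99...9 with n nines, as an exact fraction: sum of 9/10^k for k = 1..n."""
    return sum((Fraction(9, 10**k) for k in range(1, n + 1)), Fraction(0))


for n in (1, 2, 5, 10, 50):
    gap = 1 - nines(n)
    # Every finite string of nines is short of 1 by exactly 10^-n...
    assert gap == Fraction(1, 10**n)
    print(f"{n:>2} nines: gap to 1 = 1/{gap.denominator}")
# ...and 10^-n shrinks below any positive number, so 0.9 recurring = 1.
```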

FaeGlade Plural

Semper Legens

- Location

- Land of Bureaucracy

- Pronouns

- Plural/They

This reminds me of a more experimental proof of why 0.9 recurring is equal to one. No matter how many nines you add, it will be less than one, because one is equal to an infinite series of nines.

Of course, to add infinite nines, you'd need infinitely more speed (differential), which would need infinite energy. And your frame of reference will always be finite, since your speed can only be finite without infinite energy (and even with, it could only approximate c, which is still not infinite).

That's the problem with dealing with infinity in physics
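A quick numeric sketch of why each extra nine costs ever more energy, using the standard relativistic kinetic energy (γ − 1)mc² for a hypothetical 1 kg payload: each extra nine in v/c multiplies the Lorentz factor γ by roughly √10, and γ has no upper bound as v approaches c.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - beta^2), where beta = v/c and |beta| < 1."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def kinetic_energy(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2, in joules."""
    return (lorentz_factor(beta) - 1.0) * mass_kg * C**2


for n_nines in range(1, 7):
    beta = 1.0 - 10.0**-n_nines  # 0.9, 0.99, 0.999, ...
    print(
        f"{n_nines} nines: gamma = {lorentz_factor(beta):8.1f}, "
        f"KE for 1 kg = {kinetic_energy(1.0, beta):.2e} J"
    )
```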

magic9mushroom

BEST END.

I tend not to read casual posts as I do proofs, so I read it as 'since we have tachyons, we know time travel is possible', reinforced by his use of 'demonstratably possible'. If that is a misunderstanding of @magic9mushroom, then I apologise.

Maths double major. It was (though I in turn misunderstood your "citation needed" as referring to the logic rather than the premise). Accepted.

- Location

- Challenge God? y/n

Of course, to add infinite nines, you'd need infinitely more speed (differential), which would need infinite energy. And your frame of reference will always be finite, since your speed can only be finite without infinite energy (and even with, it could only approximate c, which is still not infinite).

That's the problem with dealing with infinity in physics

Nah it's really easy, just get infinite energy.

Well, go on. Go get it. Bring it here, in a bag.

Apotheosis

Lucifer

- Pronouns

- She/Her

As I understand it, it's not that the energy released by the event was greater than that of the rest of the visible universe, but that its power output was greater (i.e. the energy released per unit time was greater).

My physics teacher today told us that the cool thing about this is not the gravitational waves. Those were expected. He told us the cool thing is that the accuracy of LIGO's measurements goes beyond what the uncertainty principle would tell us is the limit of accuracy of position over time.

They successfully performed a quantum non-demolition measurement. Normally in quantum mechanics, an extremely accurate measurement of momentum would make the particle impossible to find (since Δx becomes large) and vice versa, making it impossible to make repeated accurate measurements of any quantity over time. This is an issue, as detecting a gravitational wave requires continuous measurements of distance, basically to see whether that distance changes.

What the team successfully did was to find two quantities (in this case phase and number of photons) which allowed them to make repeated accurate measurements of the phase change of the light, and therefore of the distance, while losing information about the number of photons. The light needed to make these sorts of measurements is known as squeezed light.

Quantum nondemolition measurement - Wikipedia, the free encyclopedia

http://www.squeezed-light.de/

- Pronouns

- He/Him

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

This is a badly formed question, firefossil. "0.9c in opposite directions" directly assumes a common reference frame that is not either of the starships (call it the Sun, say); as measured by an observer in that frame, the distance between the two starships grows at 1.8c.

However, from the point of view of either of the two starships, the Sun is moving at 0.9c and the other starship is moving at about 0.9945c. This is because under relativity, time intervals and distances are both frame-dependent, but the speed of light in a vacuum is invariant.

Old Spice Guy

The Man Your Man Could Smell Like

- Location

- Riding a horse, backwards

Einstein gets it right.

Shocking.

.

Shocking

.

.

RRoan

Technogaianist

- Location

- 'round a far, distant star

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

Well, no. Remember, one of the two core axioms of special relativity is that c is invariant. Imagine if Starship B was shining a laser from its front. Would Starship A see that beam of light traveling away from it at 2.8c? No, it would not, because light moves at c, and c is the same regardless of which inertial frame you measure it from. Since Starship B's laser must appear to be traveling at c to Starship A, Starship B must therefore appear to be traveling at a velocity which is less than c.
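The laser argument can be checked with the relativistic velocity-addition formula (u + v)/(1 + uv) in units of c. A minimal sketch with exact fractions, assuming starship B recedes from A at 180/181 c (0.9c composed with 0.9c) and fires its laser forward at c relative to itself: composing any speed with c gives exactly c, not the 2.8c a naive Galilean sum with the question's 1.8c would give.

```python
from fractions import Fraction


def add_velocities(u: Fraction, v: Fraction) -> Fraction:
    """Relativistic velocity addition in units of c: (u + v) / (1 + u*v)."""
    return (u + v) / (1 + u * v)


C = Fraction(1)  # speed of light, in units of c

# Speed of starship B as measured from starship A (0.9c composed with 0.9c).
ship_b = add_velocities(Fraction(9, 10), Fraction(9, 10))

# B's forward laser pulse moves at c relative to B; A measures:
pulse = add_velocities(ship_b, C)

print(ship_b, pulse)
# (u + 1) / (1 + u) = 1 for any u, so the pulse is still exactly c.
assert pulse == C
```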

TheBleachDoctor

Secretly a Cat

- Location

- The Plane of Infinite Kittens

- Pronouns

- She/Her

Brain critical does not compute.

Vorpal

Neither a dandy nor a clown

- Location

- 76 Totter's Lane, Shoreditch, London, EC1 5EG

Sorry, fresh out.

If that's the case, don't two starships driving at 0.9c in opposite directions result in de facto FTL travel? Because if I'm understanding relativity here correctly, then from the reference frame of starship A, it looks like starship B is moving away at 1.8c...

As foamy says, you're assuming that the separation speed in some inertial frame matches their relative speed in the inertial frame comoving with one of the ships, but this is incorrect.

To continue the prior analogy, if on a Euclidean plane you have two Cartesian coordinate systems sharing the same origin, S = (x,y) and S' = (x',y'), and you have the following situation: the x'-axis makes slope m with respect to S, and some line makes slope n with respect to S', what is the slope of that line with respect to S? Well, it's actually simple if you know that slope is the tangent of the angle to the x-axis: you just add the corresponding angles (say m = tan α and n = tan β), giving a Euclidean slope addition formula

m⊕n = tan(α+β) = (tan α + tan β)/(1 - tan α tan β) = (m+n)/(1-mn),

which is dual to the Lorentzian velocity addition formula following hyperbolic trigonometry (now with u = tanh α and v = tanh β):

u⊕v = tanh(α+β) = (tanh α + tanh β)/(1 + tanh α tanh β) = (u+v)/(1+uv).

So 0.9⊕0.9 ≈ 0.9945. In physics, the hyperbolic angle in spacetime is called 'rapidity'.

(I like to plug this analogy primarily because of hearing too much 'oh noes STR doesn't make sense and is inconsistent, paradox paradox paradox'. This treatment makes it plain that STR, at least in 1+1 dimensions, makes as much sense as, and is equiconsistent with, the basic Euclidean coordinate geometry most people learn in school. Well, n+1 dimensions is fine too; it's just less obvious.)
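The tan/tanh duality can be checked numerically. A minimal sketch with illustrative slope values m = 0.5 and n = 0.25: composing slopes with (m + n)/(1 − mn) agrees with adding the angles atan m and atan n, and composing velocities with (u + v)/(1 + uv) agrees with adding the rapidities artanh u and artanh v, which recovers 0.9⊕0.9 ≈ 0.9945.

```python
import math


def add_slopes(m: float, n: float) -> float:
    """Euclidean slope addition: tan(atan m + atan n) = (m + n) / (1 - m*n)."""
    return (m + n) / (1 - m * n)


def add_velocities(u: float, v: float) -> float:
    """Lorentzian velocity addition (units of c): (u + v) / (1 + u*v)."""
    return (u + v) / (1 + u * v)


# Slopes are tangents of angles, so composing slopes means adding angles.
m, n = 0.5, 0.25
assert math.isclose(add_slopes(m, n), math.tan(math.atan(m) + math.atan(n)))

# Velocities are tanh of rapidities, so composing velocities means adding rapidities.
u, v = 0.9, 0.9
rapidity = math.atanh(u) + math.atanh(v)
assert math.isclose(add_velocities(u, v), math.tanh(rapidity))

print(f"0.9 (+) 0.9 = {add_velocities(u, v):.4f}")  # 0.9945
print(f"rapidity of 0.9c = {math.atanh(0.9):.4f}")
```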

My physics teacher today told us that the cool thing about this is not the gravitational waves. Those were expected. He told us the cool thing is that the accuracy of LIGO's measurements goes beyond what the uncertainty principle would tell us is the limit of accuracy of position over time.

That's a misunderstanding. In general, if someone tells you that they beat a fundamental principle of quantum mechanics, take it with a huge grain of salt. What's actually going on is that in the context of interferometry, the term 'standard quantum limit' refers to a limit based on statistical averaging over N particles without taking into account any quantum correlations, which gives a limit of Δφ ≈ 1/√N. It is basically a shot noise model, with the 'quantum' part just recognising that the light is made of particles.

Thus, it is an idiosyncratic name that does not refer to the Heisenberg uncertainty principle at all. The interpretation you propose wouldn't even be self-consistent, because squeezed light by definition saturates the Heisenberg uncertainty limit but is still consistent with it. Taking into account quantum correlations, the actual Heisenberg limit would be Δφ ≳ 1/(2N) or something like that. In interferometry, this is sometimes called the 'sub-quantum limit', but again the name is just idiosyncratic; no actual beating of fundamental quantum principles is present.

The QND formalism is also mathematically equivalent to the usual bog-standard projective measurement once the environment is explicitly considered. That doesn't mean it's not useful; it is actually very useful in dealing with an open quantum system that interacts with its environment in unknown ways. But let's be real here: it's a clever re-framing of the situation that makes things more convenient to deal with, not an overturning of fundamental principles.
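The gap between the two limits discussed above is easy to tabulate. A rough sketch, taking the shot-noise ("standard quantum limit") scaling Δφ ≈ 1/√N and the Heisenberg scaling Δφ ~ 1/N with constant prefactors dropped (the post itself only pins the latter down as "Δφ ≳ 1/(2N) or something like that"): the largest possible improvement from quantum correlations grows like √N.

```python
import math


def shot_noise_limit(n_photons: float) -> float:
    """Phase uncertainty from uncorrelated photon statistics: ~1/sqrt(N)."""
    return 1.0 / math.sqrt(n_photons)


def heisenberg_scaling(n_photons: float) -> float:
    """Heisenberg-limited phase uncertainty, prefactor dropped: ~1/N."""
    return 1.0 / n_photons


for n in (1e2, 1e6, 1e12):
    sql = shot_noise_limit(n)
    hl = heisenberg_scaling(n)
    # The ratio sql / hl equals sqrt(N): the maximum room for improvement.
    print(f"N = {n:.0e}: shot noise ~ {sql:.1e}, Heisenberg ~ {hl:.1e}, ratio {sql / hl:.1e}")
```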

That's not what I really say (or at least mean) in the rest of the quote. What we've succeeded in doing is beating what was conventionally thought to be the limit of measurement over time as one of the consequences of the uncertainty principle.

It is true that all this is consistent with the uncertainty principle at any particular measurement. What people had difficulty with previously was making repeated measurements of this kind. It's not as if the uncertainty principle says anything directly about accuracy over time anyway.

Hotdog Vendor

Author of 'Sisters of Rail'

- Location

- (5 + 3 * sqrt(5)) / 8

- Pronouns

- She/Her

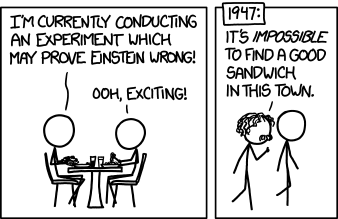

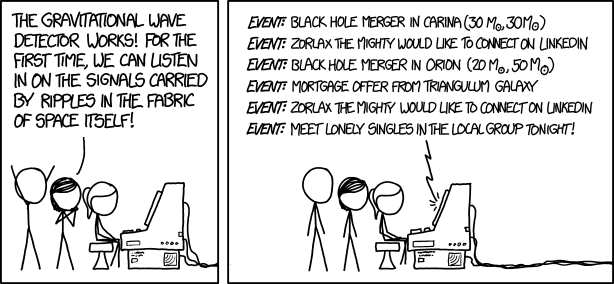

"Einstein was right" should be a meme, considering how people keep trying to prove him wrong and keep failing.

And of course the latest:

Vorpal

Neither a dandy nor a clown

- Location

- 76 Totter's Lane, Shoreditch, London, EC1 5EG

That's not what I really say (or at least mean) in the rest of the quote. What we've succeeded in doing is beating what was conventionally thought to be the limit of measurement over time as one of the consequences of the uncertainty principle.

Ok, I get you. But to me it seems odd to call it that, because the uncertainty principle just says that ΔNΔφ ≥ 1/2, and everything else is an assumption about the state, while the actual limit set by the uncertainty principle is smaller than the shot-noise limit (and is frequently called the 'Heisenberg limit' in the literature, too).

So it may be better to say that LIGO did more precise interferometry than what is possible with laser light (since that has Poisson statistics and so follows the shot-noise limit). I think this refocusing on the role of the state from the start makes things clearer, because we can see directly the relevance of (phase-)squeezed light: one can make Δφ smaller as long as one engineers the light to have a higher uncertainty in photon number. Or vice versa, e.g. a state with definite photon number, but those are obviously not useful here.
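A rough sketch of both points, under stated assumptions. It simulates laser (coherent) light as Poisson-distributed photon counts with a hypothetical mean of 50 photons, confirming Var(N) ≈ ⟨N⟩ so that ΔN ≈ √⟨N⟩. Then, taking ΔNΔφ ≥ 1/2 at face value (the number-phase relation is itself only heuristic), it shows how inflating ΔN by a squeezing factor e^r lowers the smallest allowed Δφ by e^−r. The Poisson sampler is Knuth's simple method, chosen only to avoid third-party dependencies; the squeezing parameter r and its values are illustrative assumptions.

```python
import math
import random


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed count (Knuth's multiplication method)."""
    threshold = math.exp(-mean)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def min_phase_uncertainty(delta_n: float) -> float:
    """Smallest phase spread allowed by dN * dphi >= 1/2."""
    return 1.0 / (2.0 * delta_n)


MEAN_PHOTONS = 50.0  # hypothetical mean photon number per measurement window
rng = random.Random(0)  # fixed seed for reproducibility
counts = [sample_poisson(MEAN_PHOTONS, rng) for _ in range(20_000)]
avg = sum(counts) / len(counts)
var = sum((c - avg) ** 2 for c in counts) / (len(counts) - 1)
# Coherent (laser) light: variance ~ mean, so dN ~ sqrt(<N>).
print(f"sample mean {avg:.2f}, sample variance {var:.2f}")

# Phase squeezing: inflate dN by e^r, which lowers the phase bound by e^-r.
for r in (0.0, 0.5, 1.0):
    delta_n = math.sqrt(MEAN_PHOTONS) * math.exp(r)
    print(f"r = {r}: dN = {delta_n:.2f}, min dphi = {min_phase_uncertainty(delta_n):.4f}")
```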
