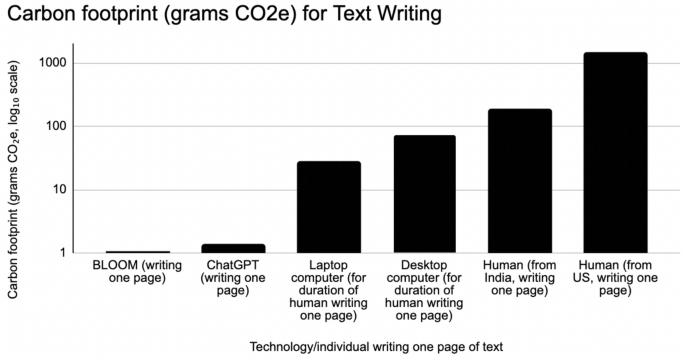

Assuming that a person's emissions while writing are consistent with their overall annual impact, we estimate that the carbon footprint for a US resident producing a page of text (250 words) is approximately 1400 g CO2e. In contrast, a resident of India has an annual impact of 1.9 metric tons [22], equating to around 180 g CO2e per page. In this analysis, we use the US and India as examples of countries with the highest and lowest per capita impact among large countries (over 300 M population).
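The per-page figure can be sketched as a simple proration of an annual footprint over the time spent writing. The snippet below is a minimal illustration, not the authors' calculation: the function name `per_page_emissions_g` is hypothetical, the year length of 8760 h is an assumption, and the writing time of 0.8 h per page is the rounded value quoted in this section.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year, 8760 h


def per_page_emissions_g(annual_tonnes_co2e: float, hours_per_page: float = 0.8) -> float:
    """Prorate an annual per capita footprint over the time spent writing one page.

    annual_tonnes_co2e: annual footprint in metric tons CO2e.
    hours_per_page: assumed writing time per page (0.8 h is the rounded value from the text).
    Returns grams of CO2e attributed to one page.
    """
    grams_per_year = annual_tonnes_co2e * 1_000_000  # 1 metric ton = 1e6 g
    return grams_per_year * hours_per_page / HOURS_PER_YEAR


# India, using the 1.9 metric tons per year quoted in the text.
india_g = per_page_emissions_g(1.9)
print(f"India: about {india_g:.0f} g CO2e per page")
```

With these inputs the result is roughly 174 g CO2e, close to the figure of around 180 g given above. The small gap would be consistent with 0.8 h being a rounded writing time, though that is an assumption rather than something this section states.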

In addition to the carbon footprint of the individual writing, we also consider the energy consumption and emissions of the computing devices used during the writing process. Over the approximately 0.8 h it takes a human to write a page, the emissions produced by running a computer are significantly higher than those generated by AI systems while writing a page. Assuming an average power consumption of 75 W for a typical laptop computer [23], the device produces 27 g CO2e [24] during the writing period. Note that using green energy providers may reduce the CO2e emissions resulting from computer usage, and that the EPA's Greenhouse Gas Equivalencies Calculator, which we used for this conversion, simplifies a complex topic. However, for the purpose of comparison to humans, we assume that the EPA calculator captures the relationship adequately. A desktop computer, by comparison, consumes 200 W, generating 72 g CO2e in the same amount of time.
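The device figures follow from energy use multiplied by a grid emission factor. The sketch below reproduces that arithmetic under stated assumptions: the function name `device_emissions_g` is hypothetical, and the factor of 450 g CO2e per kWh is back-calculated from the numbers in this section (27 g for 0.06 kWh), not taken directly from the EPA calculator.

```python
# Assumption: emission factor implied by the text (27 g CO2e / 0.06 kWh), not an official EPA value.
IMPLIED_G_CO2E_PER_KWH = 450.0


def device_emissions_g(power_watts: float, hours: float,
                       g_per_kwh: float = IMPLIED_G_CO2E_PER_KWH) -> float:
    """Emissions from running a device at constant power for a given time.

    power_watts: average power draw of the device in watts.
    hours: running time in hours.
    g_per_kwh: grid emission factor in grams CO2e per kWh (assumed, see above).
    """
    energy_kwh = power_watts * hours / 1000.0  # W * h = Wh, divided by 1000 gives kWh
    return energy_kwh * g_per_kwh


writing_hours = 0.8  # time to write one page, as stated in the text
laptop_g = device_emissions_g(75, writing_hours)    # 75 W laptop
desktop_g = device_emissions_g(200, writing_hours)  # 200 W desktop
print(f"laptop: about {laptop_g:.0f} g, desktop: about {desktop_g:.0f} g CO2e")
```

Under this implied factor, the laptop and desktop values come out at about 27 g and 72 g CO2e, matching the figures above. A lower-carbon electricity supply would correspond to passing a smaller `g_per_kwh`.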